If you’ve ever tried to translate a video and ended up with awkward lip sync, robotic voices or off-time subtitles, we know how you feel. Most tools can translate and dub, but very few can make your content feel like it was recorded in the target language to begin with.

That’s where AI video translation with lip sync changes everything. By combining voice cloning, lip syncing and subtitle alignment, you can now automatically translate and localize your video content so it sounds real, looks right and connects to people across different languages.

In this guide, we’ll show you how to translate videos with lip sync that audiences actually want to watch. Whether you’re making marketing clips, training materials or creator content for a global audience, this is how to do it right. We’ll also break down how Vozo’s AI video translator delivers natural, studio-quality results (without the studio or the time!).

Why Lip Sync Matters in Video Translation

When the lips don’t match the voice, it instantly makes your content feel fake. Viewers stop focusing on your message and start noticing the disconnect, and every second they spend distracted makes it less likely that they’ll become fans or customers.

That’s why so many creators now translate video with lip sync. Unlike basic dubbing or subtitles, syncing lips directly to speech creates the feeling that the speaker is actually fluent in the target language. This subtle change builds trust, keeps the viewers’ attention and removes the friction of watching video in a different language.

Across industries, the benefits are huge. Global brands can translate videos with lip sync to run localized ads that appeal to people in their own language and culture. Educators can deliver training in multiple languages without remaking their video content. Influencers, filmmakers and even enterprise support teams can use video translation to connect with a global audience without losing authenticity.

The Challenge of Translating Talking Videos

To translate video with lip sync, you need more than just words in a new language. You need timing, tone and natural motion that match the speaker’s intent. Most basic video translators feel “off” because they miss the emotional layer. If you’ve ever sat through flat dubbed audio, awkward pauses or voices that don’t suit the face on screen, you’ll understand.

Even with accurate translation, things often go wrong. Subtle facial expressions don’t quite line up. The lip sync is off, or the speaker’s accent feels mismatched. And if there’s text in the frame, it might stay untranslated, breaking the illusion of a real-life performance.

That’s what makes AI the best way to translate video with lip sync. The tool needs to understand who’s speaking, assign the right voice clone, match emotional tone and align lip movements, all in a seamless, believable way. Without that precision, your message can very easily get lost in translation.

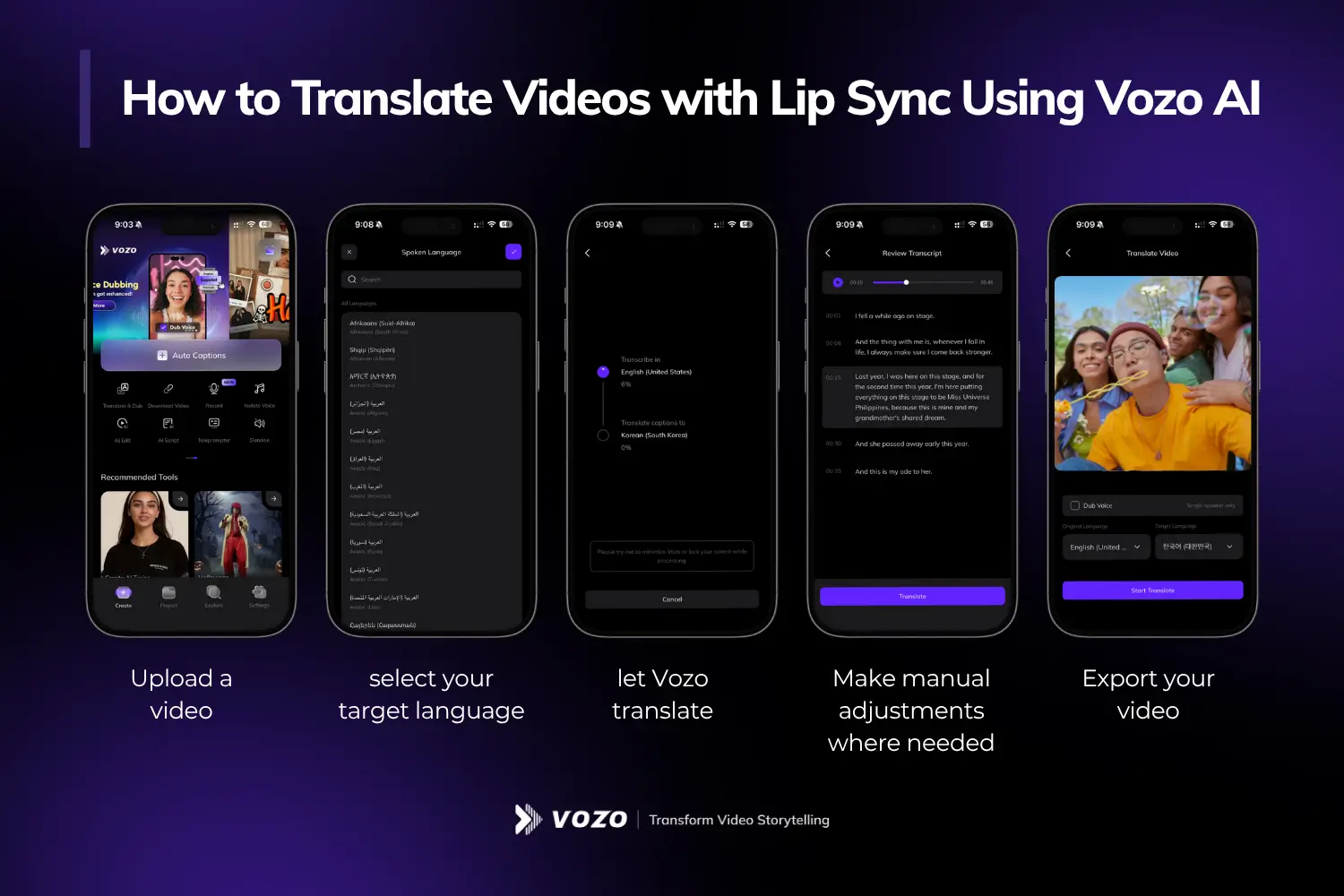

How to Translate Videos with Lip Sync Using Vozo AI

Vozo AI makes it easy to translate videos with lip sync using one simple timeline editor. Everything, including translation, voice cloning, lip sync and subtitle editing, happens in one place. You upload a video, select the target language and let Vozo’s AI video translation engine do the heavy lifting.

At the core of the platform is LipREAL™, Vozo’s self-trained model for extremely precise lip sync. It adapts to complex speech patterns, unique facial shapes, beards, masks and off-center camera angles with precise control, at a frame-by-frame level. This makes sure every lip movement matches the new dubbed audio, even in multi-speaker scenes, with both human and avatar models.

Vozo’s AI video translator also gives you control where it counts. You can:

- Use a translation glossary to lock in brand terms.

- Adjust pronunciation at the word level.

- Match tone and emotion using realistic AI voices.

- Add subtitles with smart timing and clean line breaks.

If you’re looking to translate and dub videos that feel natural in every language, Vozo brings all the right tools together and makes them easy to use.

How Vozo AI Translates Videos with Lip Sync

1. Detecting Speech and Segmenting Audio

The first step in any video translation is isolating the spoken words. Advanced tools scan the video file to detect who’s talking, when they’re speaking, and where each sentence begins and ends. This is especially important when you’re working with group conversations or interviews in multiple languages.
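
As a rough illustration of what segmentation means in practice, here is a minimal Python sketch. It assumes you already have word-level timestamps with speaker labels from some speech recognition step. The `Word` and `Segment` types, the `group_into_segments` helper and the 0.6-second pause threshold are all hypothetical and are not taken from Vozo’s product.

```python
from dataclasses import dataclass


@dataclass
class Word:
    speaker: str
    start: float  # seconds from the start of the video
    end: float
    text: str


@dataclass
class Segment:
    speaker: str
    start: float
    end: float
    text: str


def group_into_segments(words, max_gap=0.6):
    """Merge words into segments, splitting on a speaker change or a long pause.

    max_gap is an assumed pause threshold in seconds, chosen for illustration.
    """
    segments = []
    for word in sorted(words, key=lambda w: w.start):
        last = segments[-1] if segments else None
        same_turn = (
            last is not None
            and last.speaker == word.speaker
            and word.start - last.end <= max_gap
        )
        if same_turn:
            # Extend the current segment with this word.
            last.end = max(last.end, word.end)
            last.text += " " + word.text
        else:
            # Start a new segment for a new speaker or after a long pause.
            segments.append(Segment(word.speaker, word.start, word.end, word.text))
    return segments


words = [
    Word("A", 0.0, 0.4, "Hello"),
    Word("A", 0.5, 0.9, "everyone"),
    Word("B", 1.2, 1.5, "Hi"),
    Word("A", 3.0, 3.4, "Welcome"),
]
segments = group_into_segments(words)
for seg in segments:
    print(f"{seg.speaker} [{seg.start:.1f}-{seg.end:.1f}] {seg.text}")
```

Each resulting segment carries a speaker, a start time and an end time, which is the information the later translation and lip sync steps need.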

2. Translating and Cloning the Voice

Next, the system applies AI-powered video translation to convert the speech into the target language. But instead of using generic voiceovers, it generates a natural-sounding voice clone that mirrors the tone, rhythm and energy of the original speaker. This makes it feel like the same person is speaking, just in another language.

3. Aligning Lip Movements with Translated Audio

Once the new dubbed audio is ready, lip sync AI aligns it with the speaker’s lip movements. This means adjusting the mouth frame-by-frame so that every syllable looks like it’s being said naturally. Vozo uses advanced AI to automate this process in just a couple of minutes.

4. Managing Multi-Speaker Scenes

In scenes with more than one speaker, the AI video translation tool assigns the right audio track to the right face. It tracks who’s talking and applies unique timing, lip sync and voice cloning technology for each person, preventing overlaps or mismatching.
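
To make the idea of overlaps concrete, the sketch below flags places where two different speakers talk at the same time, so those moments can be reviewed before dubbing. It is a generic illustration under simple assumptions: segments are plain `(speaker, start, end)` tuples, and `find_overlaps` is a hypothetical helper, not part of any Vozo API.

```python
def find_overlaps(segments):
    """Return pairs of segments from different speakers whose time ranges overlap.

    Each segment is a (speaker, start_seconds, end_seconds) tuple.
    """
    ordered = sorted(segments, key=lambda s: s[1])
    overlaps = []
    for i, (spk_a, start_a, end_a) in enumerate(ordered):
        for spk_b, start_b, end_b in ordered[i + 1:]:
            if start_b >= end_a:
                # Segments are sorted by start time, so nothing later can overlap.
                break
            if spk_a != spk_b:
                overlaps.append(((spk_a, start_a, end_a), (spk_b, start_b, end_b)))
    return overlaps


segments = [
    ("host", 0.0, 4.0),
    ("guest", 3.5, 6.0),
    ("host", 6.0, 8.0),
]
overlaps = find_overlaps(segments)
for first, second in overlaps:
    print(f"{first[0]} and {second[0]} overlap between {second[1]}s and {min(first[2], second[2])}s")
```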

This step-by-step process allows you to translate video with lip sync that looks and feels completely real, across any language, setting, or audience.

Features to Look for in a Video Translation Tool

Not every video translator delivers natural results. To translate video with lip sync that actually connects, look for tools that go beyond word replacement and preserve the performance.

High translation accuracy is the baseline, but emotion matters too. Choose tools that mirror the speaker’s tone, not just the script, to make it look and sound natural.

Support for multi-speaker scenes is another must-have. The best AI video translation tools assign dubbed audio to the right face automatically, with individual lip sync alignment. And if you’re currently only using single-face videos, it’s still worth considering, because you never know when you might need to branch out.

Voice cloning must replicate the speaker’s tone, pitch and accent. Flat voiceovers break the immersive feeling you want your viewers to have.

Bonus features like subtitle alignment and text-in-image translation are a great help for keeping all parts of the video consistent and easy to follow, especially for multilingual content.

Getting the Best Results with Vozo

To get the most accurate results from Vozo’s AI video translation tool, start with the basics: a video with clean visuals and crisp sound. Clear audio, stable lighting and front-facing speakers help the lip sync process detect motion and speech correctly, which goes a long way towards making speech sound natural and eliminating language barriers.

Next, set your translation preferences inside the editor. Lock pronunciation for brand names using the glossary, then apply voice cloning and lip-sync options to maintain the same tone and emotion. This is how Vozo creates realistic AI performances that don’t feel dubbed.
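
For readers curious how a glossary can keep brand names intact through translation, one common general technique is placeholder substitution: swap each protected term for a token before translating, then swap the approved term back in afterwards. The Python sketch below illustrates that idea only. It is not a description of how Vozo implements its glossary, the `protect_terms` and `restore_terms` helpers are hypothetical, and the translation step is faked with simple string replacement.

```python
import re


def protect_terms(text, glossary):
    """Replace glossary terms with placeholder tokens before translation.

    glossary maps a source term to the form it should take in the output.
    Returns the protected text and a token-to-target mapping.
    """
    mapping = {}
    # Handle longer terms first so a short term never splits a longer one.
    for i, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"__TERM{i}__"
        pattern = re.compile(r"\b" + re.escape(term) + r"\b")
        text, count = pattern.subn(token, text)
        if count:
            mapping[token] = glossary[term]
    return text, mapping


def restore_terms(text, mapping):
    """Put the approved target terms back in place of the placeholder tokens."""
    for token, target in mapping.items():
        text = text.replace(token, target)
    return text


glossary = {"Vozo": "Vozo", "LipREAL": "LipREAL"}
protected, mapping = protect_terms("Vozo uses LipREAL for lip sync.", glossary)

# Stand-in for a real translation step (assumption: the translator leaves tokens untouched).
translated = protected.replace("uses", "utiliza").replace(
    "for lip sync", "para la sincronización labial"
)
restored = restore_terms(translated, mapping)
print(restored)
```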

Before exporting, preview your video localization frame by frame. It may take a bit more time, but it’s worthwhile. You can fine-tune audio and video alignment in real time to match speech, expression and subtitle flow.

Vozo’s AI-powered translation makes it effortless to automatically translate your video into multiple languages, helping you reach a wider market with localized content that resonates.

Ready to go global? Upload your video and start translating videos with AI-precise lip sync today. Try Vozo’s full editor with a free trial and make your videos accessible worldwide.

Translate Videos with Lip Sync FAQs

How long does video translation with lip sync usually take?

It depends on the video length, number of speakers and how many languages you’re targeting. A short clip may only take a few minutes, while longer marketing videos with multiple voices might take an hour or two to fully translate and dub. Still faster than doing it manually!

Do lip sync translation tools work on noisy or low-quality videos?

Yes, but your results will be better with clear audio. That being said, most advanced AI video translators can isolate voices, clean up background noise and still guide accurate lip sync using facial tracking, even if the original recording isn’t perfect.

Can I translate videos that include multiple people speaking at once?

You can. Vozo’s online video translator tracks each speaker, applies a unique voice clone and adjusts the lip movements to match the timing. It’s designed to make interviews, panels and group content seamless and easy to understand.

Is lip-synced translation better than subtitles?

Subtitles are helpful, but they shift focus away from the speaker. A properly translated video with lip sync keeps the viewer engaged with the face, tone and timing, all in their native language.
